Within our company, we are used to doing web scraping with Python and Selenium. But we have run into an obstacle: 2 GB of memory is not enough when more than 30 tabs are opened automatically in Chromium. The machine becomes very slow for lack of memory.

What do we do? Buy a machine with more than 2 GB of memory?

In our opinion, no. It is an additional investment, and it generates more garbage for the planet as equipment becomes obsolete. We have an Orange Pi Plus 2E (opi) here that is always on, and getting an opi with 4 GB of memory would be quite expensive. So Emacs comes to our rescue and makes our 2 GB of memory more than enough. We had already stopped using w3m and were using eww (another browser, built into recent versions of Emacs), but the w3m mailing list started to move again with new ideas, dusting off features built up over more than 20 years of w3m development, and the truth is that we have been surprised once again by the versatility of w3m.

Some facts that we should also know:

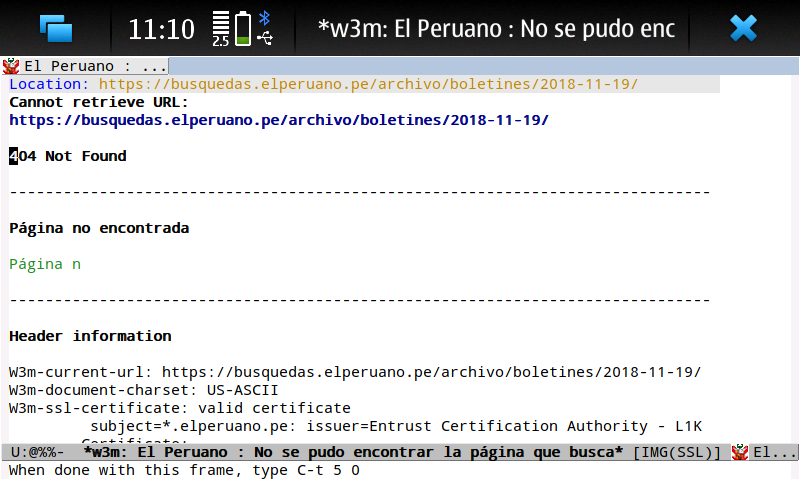

- The server hosting the PDFs that we will open with our Emacs + w3m web scraping is probably not prepared to receive more than 30 simultaneous requests, since it has gone down more than once. This led us to mask the w3m user agent, in case they want to filter us out by that mechanism.

- As mentioned above, opening more than 30 tabs in Chromium requires more memory than our Orange Pi has.

- The website from which we collect information receives notices for publication (it is a newspaper), and the newspaper's clients only send an image inside a PDF. So to see the PDF (the information the client wishes to publish) we have to click on a link.
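Since the server has gone down under more than 30 simultaneous requests, one option is to space the requests out instead of opening every tab at once. What follows is only a sketch under assumptions: `my/stagger` and `my/run-staggered` are hypothetical names that do not appear in our code, and the gap in seconds is arbitrary. It relies only on the standard Emacs function `run-at-time`.

```emacs-lisp
;; Hypothetical helpers for illustration; not part of our original code.

(defun my/stagger (items gap)
  "Pair each element of ITEMS with a start delay, GAP seconds apart.
Return a list of (DELAY . ITEM) cells; the first item gets delay 0."
  (let ((delay 0)
        (plan '()))
    (dolist (item items)
      (push (cons delay item) plan)
      (setq delay (+ delay gap)))
    (nreverse plan)))

(defun my/run-staggered (items gap fn)
  "Schedule FN to be called on each of ITEMS, GAP seconds apart."
  (dolist (cell (my/stagger items gap))
    ;; A numeric first argument to `run-at-time' means seconds from now.
    (run-at-time (car cell) nil fn (cdr cell))))
```

With a list of URLs in `urls`, something like `(my/run-staggered urls 2 (lambda (u) (w3m-browse-url u t)))` would then open roughly one tab every two seconds.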

How do we do web scraping with Emacs and w3m?

This is what we need to do:

- Keep only the publications that are just an image inside a PDF. We will use occur for this.

- With the list of all matching items (the ones that are an image inside a PDF), go through the web page again to locate the URL of each match, or rather of each document.

- Then open each of the document URLs in w3m inside Emacs.
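The first step can be illustrated without occur at all. The sketch below collects the identifiers with a plain regexp search in a temporary buffer. It is a simplified illustration, not our actual method: `my/collect-matches` is a hypothetical name, the regexp is a variation on the one we pass to occur, and the sample text is invented (only the `002-` prefix and the "Firmado Digitalmente por" marker come from the real page).

```emacs-lisp
;; Hypothetical helper for illustration; not part of our original code.
(defun my/collect-matches (regexp text)
  "Return every substring of TEXT matched by group 1 of REGEXP, in order."
  (let ((found '()))
    (with-temp-buffer
      (insert text)
      (goto-char (point-min))
      (while (re-search-forward regexp nil t)
        (push (match-string-no-properties 1) found)))
    (nreverse found)))

;; Invented sample input: only the second line carries both the
;; "002-" identifier and the digital-signature marker.
(my/collect-matches
 "\\(002-[^ \n]+\\) .*Firmado Digitalmente por"
 "001-AAA-1 plain notice\n002-BBB-2 doc Firmado Digitalmente por X\n")
```

Each identifier collected this way can then be searched for in the w3m buffer to find the link of its document.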

Here is the code that meets these requirements.

    ;-*- mode: emacs-lisp; encoding: utf-8; -*-
    (when (boundp 'w3m-display-hook)
      (setq w3m-user-agent-site-specific-alist
            '(("^https?://busquedas\\.elperuano\\.pe" . "Mozilla/5.0 (Linux; U; Android 4.0.3; ko-kr; LG-L160L Build/IML74K) AppleWebkit/534.30 (KHTML, like Gecko) Version/4.0 Mobile Safari/534.30")))
      ; https://emacs.stackexchange.com/questions/19686/how-to-use-pdf-tools-pdf-view-mode-in-emacs
      (defvar my-w3m-avisa-peruano-occur-lst '() "harvested matchs from occur")
      (defvar my-w3m-avisa-peruano-url-lst '() "harvested url's that match the query")
      (defun sunshavi/w3m-avisa-peruano (url)
        "get the links from pdfs that are just images. "
        (when (string-match (base64-decode-string "aHR0cHM6Ly9idXNxdWVkYXMuZWxwZXJ1YW5vLnBlL2FyY2hpdm8vYm9sZXRpbmVzLw==") url)
          (occur-1 " 002-.* Firmado Digitalmente por" (- 2 2) (list (current-buffer)))
          (when (get-buffer "*Occur*")
            (setq my-w3m-avisa-peruano-occur-lst '())
            (setq my-w3m-avisa-peruano-url-lst '())
            (let ((num-lines (count-lines (point-min) (point-max))) ; line counting
                  (counter 1)
                  (my-w3m-buffer (current-buffer)))
              (with-current-buffer (get-buffer "*Occur*")
                (goto-char (point-min))
                (end-of-line)
                (forward-line)
                (while (< counter num-lines) ;iterate lines
                  (back-to-indentation)
                  (search-forward "002" nil t)
                  (backward-word)
                  (let ((beg (point)))
                    (search-forward " " nil t)
                    (backward-char 1)
                    (if (> (length (buffer-substring-no-properties beg (point))) 3)
                        (add-to-list 'my-w3m-avisa-peruano-occur-lst
                                     (buffer-substring-no-properties beg (point)))))
                  (forward-line)
                  (setq counter (+ 1 counter))))
              (delete-other-windows)
              (when (> (length my-w3m-avisa-peruano-occur-lst) 0) ;use the matches list to fill the url's list
                (with-current-buffer my-w3m-buffer
                  (goto-char (point-max))
                  (dolist (my-item my-w3m-avisa-peruano-occur-lst)
                    (search-backward my-item nil t)
                    (end-of-line)
                    (search-forward "Descarga individual" nil t)
                    (end-of-line)
                    (backward-char 1) ;"Descarga individual"
                    (add-to-list 'my-w3m-avisa-peruano-url-lst
                                 (w3m-active-region-or-url-at-point)))))
              (when (> (length my-w3m-avisa-peruano-url-lst) 0) ; fill a temp buffer and evaluate it
                (with-temp-buffer
                  (insert "(progn\n")
                  (dolist (my-item-3 my-w3m-avisa-peruano-url-lst)
                    (insert "(w3m-browse-url \"")
                    (insert my-item-3)
                    (insert "\" t)\n"))
                  (insert ")")
                  (eval-buffer)))))))
      (add-hook 'w3m-display-hook 'sunshavi/w3m-avisa-peruano)
      (defun sunshavi/w3m-lambda-confirm-page-load()
        (if (y-or-n-p "Do You want to process documents for today?")
            (progn
              (w3m-browse-url (concat (base64-decode-string "aHR0cHM6Ly9idXNxdWVkYXMuZWxwZXJ1YW5vLnBlL2FyY2hpdm8vYm9sZXRpbmVzLw==")
                                      (format-time-string "%Y-%m-%d")
                                      "/")
                              t)))))
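Two details of this code may be easier to follow in isolation: how the address of the day's page is built, and how the collected URLs get opened. The sketch below uses hypothetical names (`my/boletines-base`, `my/boletines-url-for`, `my/boletines-url-p`, `my/open-each`) that are not in our code. It also makes two substitutions that should be read as assumptions rather than as our method: a prefix test with `string-prefix-p` instead of `string-match` (which treats the dots in the URL as regexp wildcards and matches anywhere in the string), and a direct `dolist` instead of writing a `progn` into a temporary buffer and evaluating it.

```emacs-lisp
;; Hypothetical names for illustration; not part of our original code.

(defconst my/boletines-base
  (base64-decode-string
   "aHR0cHM6Ly9idXNxdWVkYXMuZWxwZXJ1YW5vLnBlL2FyY2hpdm8vYm9sZXRpbmVzLw==")
  "Base URL of the bulletin archive, decoded from the same base64 string.")

(defun my/boletines-url-for (time)
  "Return the archive URL for the day containing TIME."
  (concat my/boletines-base (format-time-string "%Y-%m-%d" time) "/"))

(defun my/boletines-url-p (url)
  "Return non-nil if URL starts with the archive base URL."
  (string-prefix-p my/boletines-base url))

(defun my/open-each (urls open-fn)
  "Call OPEN-FN on each element of URLS, in order."
  (dolist (u urls)
    (funcall open-fn u)))
```

For example, `(my/open-each my-w3m-avisa-peruano-url-lst (lambda (u) (w3m-browse-url u t)))` would open each harvested URL in its own w3m session, and it avoids having to embed the URLs inside string literals.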

Here is the bash alias:

    alias myemacs="emacs --no-bitmap-icon -Q --eval \" (progn (defvar my-cli-not-init-pkgs-flag t \\\"my emacs var, indicates how this emacs was started\\\")(load \\\"~/.emacs.d/init.el\\\"))\""

Conclusion

Emacs + w3m allow us to do web scraping efficiently. They also let us complete tasks that, with conventional tools, would require more modern and therefore more expensive hardware, so they save us further expense.

Note

The company that publishes the website from which we were extracting information has stopped publishing the web addresses of its files and now only publishes a PDF with the same information. We are downloading that PDF and viewing it with pdf-tools. We would need to adapt the occur step to work within pdf-tools, and then look up each PDF for each match. We thank Andreas Politz, the creator of pdf-tools, for such a good and effective tool.

Last change: 21.12.2018 02:31